Neural Speech Decoding

Project Summary

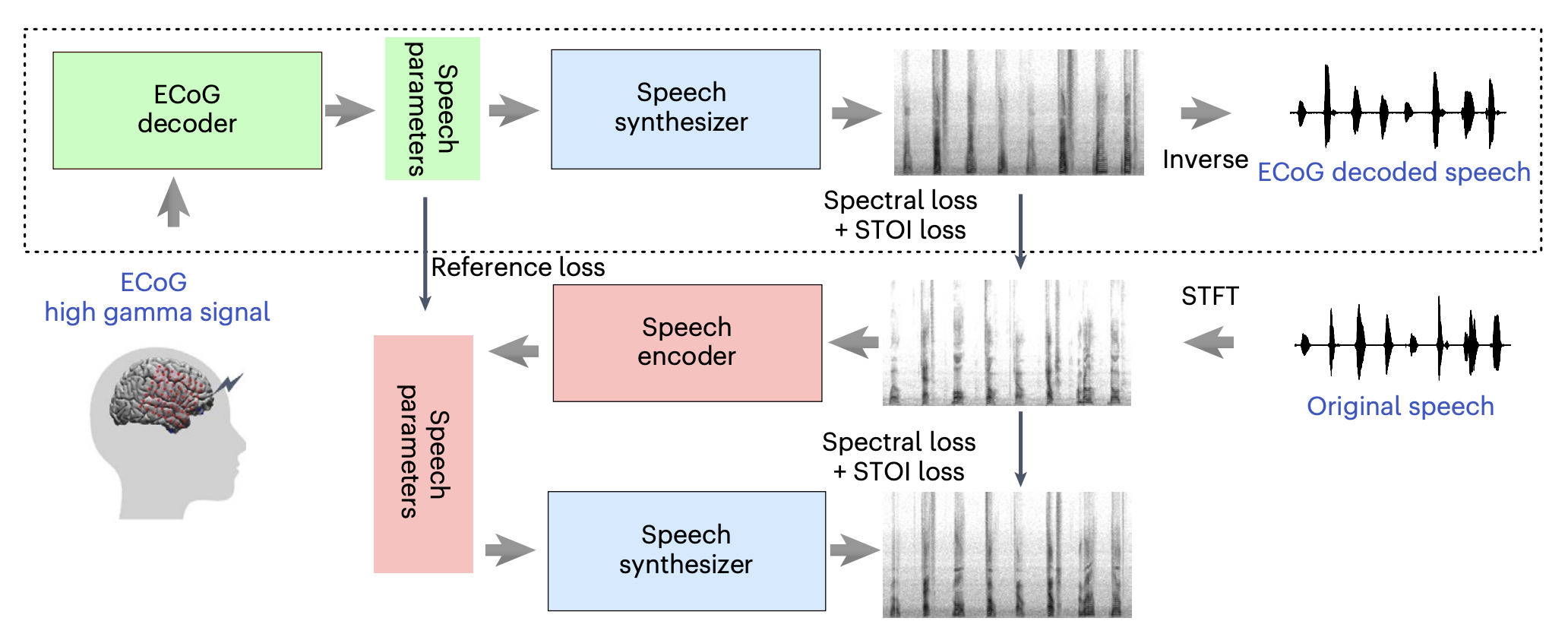

Decoding human speech from neural signals is essential for brain–computer interface (BCI) technologies that aim to restore speech in populations with neurological deficits. However, it remains a highly challenging task, compounded by the scarcity of neural recordings paired with corresponding speech and by the complexity and high dimensionality of the data. Here we present a novel deep learning-based neural speech decoding framework with two components: an ECoG decoder that translates electrocorticographic (ECoG) signals from the cortex into interpretable speech parameters, and a novel differentiable speech synthesizer that maps those speech parameters to spectrograms.
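The two-stage pipeline described above (ECoG signals to speech parameters, then speech parameters to a spectrogram) can be illustrated with a toy sketch. This is not the project's implementation. The linear decoder, the choice of parameters (pitch `f0`, `loudness`, and three formant frequencies), the Gaussian source-filter synthesizer, the function names and all dimensions are hypothetical assumptions made only for illustration. NumPy is used for brevity; because every step is a smooth function of its inputs, the same code written in an autodiff framework would be differentiable end to end.

```python
import numpy as np

# Hypothetical dimensions, chosen only for illustration.
N_ELECTRODES = 64    # ECoG channels
N_FRAMES = 50        # decoded time frames
N_FREQ_BINS = 257    # spectrogram frequency bins
N_FORMANTS = 3       # formant frequencies predicted per frame
SAMPLE_RATE = 16000  # Hz


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def ecog_decoder(ecog, weights, bias):
    """Map ECoG features (frames x electrodes) to per-frame speech parameters.

    A single linear layer stands in for the deep ECoG decoder (an assumption).
    Sigmoids squash outputs into plausible ranges so the parameters stay interpretable.
    """
    raw = ecog @ weights + bias                        # (T, 2 + N_FORMANTS)
    f0 = 80.0 + 170.0 * sigmoid(raw[:, 0])             # pitch in 80-250 Hz
    loudness = sigmoid(raw[:, 1])                      # loudness in (0, 1)
    formants = 200.0 + 3800.0 * sigmoid(raw[:, 2:])    # formants in 200-4000 Hz
    return f0, loudness, formants


def synthesize_spectrogram(f0, loudness, formants, harmonic_width=30.0, bandwidth=150.0):
    """Toy source-filter synthesizer (hypothetical, not the project's synthesizer).

    Source: Gaussian peaks at integer multiples of f0 (the harmonics).
    Filter: sum of Gaussian resonances centered on the formant frequencies.
    Every operation is smooth in the parameters, so it is differentiable in principle.
    """
    freqs = np.linspace(0.0, SAMPLE_RATE / 2, N_FREQ_BINS)            # (F,)
    harmonics = f0[:, None] * np.arange(1, 21)[None, :]               # (T, 20)
    source = np.exp(-(freqs[None, None, :] - harmonics[:, :, None]) ** 2
                    / (2 * harmonic_width ** 2)).sum(axis=1)          # (T, F)
    envelope = np.exp(-(freqs[None, None, :] - formants[:, :, None]) ** 2
                      / (2 * bandwidth ** 2)).sum(axis=1)             # (T, F)
    return loudness[:, None] * source * envelope                      # (T, F)


# Usage with random stand-in data and untrained weights.
rng = np.random.default_rng(0)
weights = rng.normal(scale=0.1, size=(N_ELECTRODES, 2 + N_FORMANTS))
bias = np.zeros(2 + N_FORMANTS)
ecog = rng.normal(size=(N_FRAMES, N_ELECTRODES))

f0, loud, formants = ecog_decoder(ecog, weights, bias)
spec = synthesize_spectrogram(f0, loud, formants)
print(spec.shape)  # (50, 257)
```

The sketch shows only the forward pass. Making the synthesizer differentiable is what allows a loss computed on the output spectrogram to be propagated back through the synthesizer into the decoder's weights.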

Participants

Yao Wang, Principal Investigator

Adeen Flinker, Co-Principal Investigator

Amirhossein Khalilian-Gourtani, Postdoc

Xupeng Chen, Ph.D. student

Nika Emami, Ph.D. student

Chenqian Le, Ph.D. student

Tianyu He, Visiting student

Sponsor

This project is funded by the National Science Foundation (NSF).

Related Publications

- Chen, X., Wang, R., Khalilian-Gourtani, A., Yu, L., Dugan, P., Friedman, D., Doyle, W., Devinsky, O., Wang, Y. and Flinker, A. “A Neural Speech Decoding Framework Leveraging Deep Learning and Speech Synthesis.” In Nature Machine Intelligence, April 2024.

- Wang, R., Chen, X., Khalilian-Gourtani, A., Yu, L., Dugan, P., Friedman, D., Doyle, W., Devinsky, O., Wang, Y. and Flinker, A. “Distributed Feedforward and Feedback Cortical Processing Supports Human Speech Production.” In Proceedings of the National Academy of Sciences 120, no. 42 (2023): e2300255120.

Explore the available code for this project on this GitHub page.